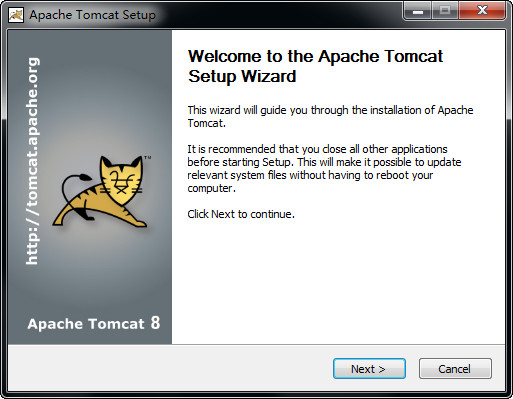

Tomcat can be run as a standalone server. It can also be run as an add-on to the Apache HTTP Server (or Microsoft IIS), acting as the Java servlet/JSP container. In this combination, Tomcat executes the Java servlets and JSPs, while Apache serves the static HTML pages and performs other server-side functions such as CGI, PHP and SSI.

To connect the two, Apache needs an adapter module. When Apache receives a request, it checks whether the request is meant for Tomcat. If so, it lets the adapter take the request and forward it to Tomcat, as illustrated in the figure above. There are a few adapter modules available: the Apache JServ Protocol (AJP) v1.2 "JServ" module (outdated), the AJP v1.3 "JK 1.2" module (in use) and the "JK 2" module (deprecated). I will only describe the JK 1.2 module with Apache 2 here. I shall assume that Apache is installed in the directory "d:\myproject\apache" and runs on port 7000, and I shall denote the Apache installation directory as $APACHE_HOME.
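As a rough illustration of the JK 1.2 setup, a fragment of $APACHE_HOME\conf\httpd.conf might look like the sketch below. The worker name `worker1`, the module file name `mod_jk.so`, the log path and the `/examples/*` mount are assumptions made for the example, not values from the text; port 8009 is Tomcat's default AJP port and should match the AJP connector in your Tomcat `server.xml`.

```apache
# httpd.conf fragment (sketch; names and paths are assumptions)
Listen 7000

# Load the JK 1.2 adapter; the file name depends on the binary you downloaded.
LoadModule jk_module modules/mod_jk.so

# Define one AJP v1.3 worker that points at Tomcat's AJP connector.
JkWorkerProperty worker.list=worker1
JkWorkerProperty worker.worker1.type=ajp13
JkWorkerProperty worker.worker1.host=localhost
JkWorkerProperty worker.worker1.port=8009

# Adapter log, relative to the Apache installation directory.
JkLogFile logs/mod_jk.log
JkLogLevel info

# Requests matching this pattern are forwarded to Tomcat;
# everything else is served by Apache itself.
JkMount /examples/* worker1
```

The same worker properties are often kept in a separate `workers.properties` file referenced with `JkWorkersFile`; the inline `JkWorkerProperty` form is used here only to keep the sketch in one place.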


Our ActiveMQ brokers running on a single-core, 1 GB Digital Ocean node easily push 5k messages/s through ~200 queues and another several thousand/s through a couple hundred topics. They do reach a cliff edge on single cores, though. Going dual-core pretty much quadruples the throughput, to the point where SSD latency becomes the problem.

Our Tomcat servers don't see 15k requests/s, but our load testing has shown a pair of them is good up to 3k/s (each) on dual-core 2 GB servers with 512 MB heaps. We really didn't push it much further because that exceeds our needed capacity. I'm fairly certain we could hit 15k req/s per node with plain Tomcat if we actually tried. I do think that if you wanted to stretch past 15k req/s you would probably need more exotic programming models like Vert.X, but that comes at the cost of complexity.

I think the ultimate in throughput would be to have Tomcat listen on a Unix domain socket (this is actually supported in Tomcat) and have TCP terminated with HAProxy. We set this up and it is _really really really_ fast, but we'd be on our own for support since nobody else is really doing it. Tomcat also has some strange behavior with the sock file: you kind of have to manage it on your own, but that could be done within the startup scripts.

I agree that it matters, in that you're much more likely, or even only able, to hit this throughput by using an event loop runtime. In other words, with 32/64 concurrent threads you won't get the throughput you want, so we agree there. However, I'll elaborate on my point and why it's not just "use an event loop". If you block the event loop you can get catastrophic behavior, where your entire server acts as if it's a single-threaded synchronous runtime. But even when you don't block the event loop, you need optimal IO _within a given coroutine_. If you are waiting a bunch of ms for multiple network calls and also a DB write within a coroutine, your latency is going to go up and you might not hit your latency requirements. I was specifying that the architecture took these concepts into account and got low latency on each request in a high-throughput way by not blocking on writes, precaching info in server memory, etc.
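The point about latency inside a single coroutine can be sketched in Java with `CompletableFuture`. This is only an illustration under stated assumptions: `fakeIo` is a hypothetical stand-in that simulates a network call or DB write by sleeping on a pool thread, and the 100 ms delays are made up. It is not the architecture described above, just a way to see that chaining three waits adds their latencies, while starting them together costs roughly the slowest one.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class IoLatencySketch {
    // Hypothetical stand-in for a network call or DB write that takes `millis` ms.
    static CompletableFuture<String> fakeIo(String name, long millis, ExecutorService pool) {
        return CompletableFuture.supplyAsync(() -> {
            try {
                TimeUnit.MILLISECONDS.sleep(millis);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            return name;
        }, pool);
    }

    // Each call starts only after the previous one completes, so latencies add up.
    static long sequentialMillis(ExecutorService pool) {
        long start = System.nanoTime();
        fakeIo("service-a", 100, pool)
            .thenCompose(a -> fakeIo("service-b", 100, pool))
            .thenCompose(b -> fakeIo("db-write", 100, pool))
            .join();
        return TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
    }

    // All three calls are started up front; total latency is about the slowest call.
    static long concurrentMillis(ExecutorService pool) {
        long start = System.nanoTime();
        CompletableFuture<String> a = fakeIo("service-a", 100, pool);
        CompletableFuture<String> b = fakeIo("service-b", 100, pool);
        CompletableFuture<String> db = fakeIo("db-write", 100, pool);
        CompletableFuture.allOf(a, b, db).join();
        return TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
    }

    public static void main(String[] args) {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        try {
            System.out.println("sequential ms: " + sequentialMillis(pool));
            System.out.println("concurrent ms: " + concurrentMillis(pool));
        } finally {
            pool.shutdown();
        }
    }
}
```

In a real event loop runtime the waits would be non-blocking IO rather than sleeping threads, but the shape of the request handler matters in the same way: independent calls that are awaited one after another push per-request latency up even though nothing blocks the loop.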
